On September 29, 2025, California made history. Governor Gavin Newsom signed SB 53, the first U.S. state law specifically targeting frontier AI models: systems like GPT-4o, Claude, and Gemini that power much of today’s generative AI ecosystem.

With this move, California addressed a gap in federal regulation by establishing new obligations for the most powerful AI developers. The law requires large-scale model providers to:

- Publish safety frameworks

- Conduct and disclose risk assessments

- Report critical incidents

- Extend whistleblower protections

For the first time, U.S. companies building frontier AI must meet codified state-level safety requirements, marking a decisive shift in America’s AI governance landscape.

A Policy Turnaround in Sacramento

Just a year ago, Governor Newsom vetoed SB 1047, a similar bill criticized for being too broad and economically disruptive. That bill included steep penalties, 72-hour incident reporting requirements, and even mandatory “kill switches.”

This time, the governor signed a more focused bill that drops some of the more controversial provisions while still addressing “catastrophic risk.” The shift reflects two realities:

- Public pressure to act on AI safety has increased.

- States are filling the gaps left by a lack of federal policy.

State-by-State Innovation in AI Regulation

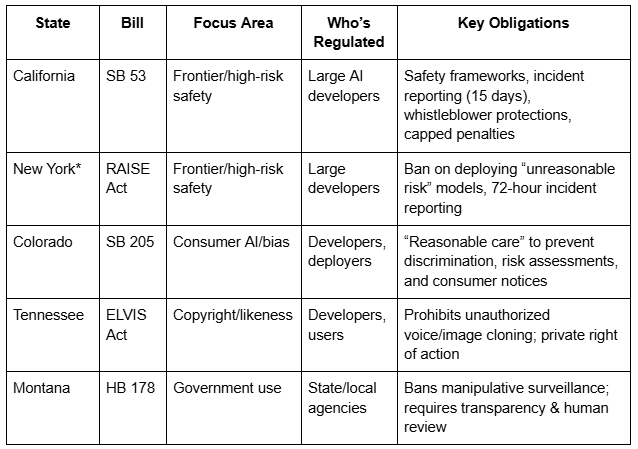

California isn’t alone. Across the country, states are experimenting with different approaches to AI governance:

(*pending governor’s signature)

Each of these laws responds to pressing local concerns—artists fear unauthorized cloning, consumers fear bias, voters fear deepfakes. States are proving faster and more experimental than Washington in tackling AI’s immediate risks.

The Power—and Limits—of State Action

State legislation has long shaped national and even global regulatory frameworks. Consider the California Consumer Privacy Act (CCPA): passed in 2018, it reshaped U.S. data protection, informed federal debates, and influenced data privacy regulations abroad.

AI is following the same trajectory. In 2025, every U.S. state introduced AI-related bills, and 38 states enacted over 100 measures. Yet this surge raises real challenges:

- Fragmentation: Different rules in different states create uncertainty for developers and uneven protections for users.

- National security risk: Adversaries can exploit weak or inconsistent regulatory regimes.

- Global influence: Without a coherent federal framework, U.S. leadership in AI governance may falter.

The reality: AI doesn’t stop at state borders. A frontier model trained in San Francisco can be used in Boston, Honolulu, or New Orleans immediately.

What Comes Next and Why It Matters

Even as federal discussions refocus on coordination and voluntary frameworks, states are setting binding precedents that will likely shape national and even global norms.

At the state level, companies should expect to see:

- Rapidly changing obligations across multiple jurisdictions.

- Continued public and regulatory scrutiny of safety, bias, and transparency.

- Rising expectations to demonstrate responsible AI practices proactively.

What happens in California won’t stay in California. These early laws will inform national debates, influence global approaches, and redefine how AI accountability is measured. OpenPolicy remains at the center of that dialogue—translating emerging policies into actionable strategies for responsible growth.